December 08, 2020

This is a recap of the “Crawlers Gonna Rate” webinar, which we streamed last week.

When you read the webinar’s title, you may imagine some slow-moving creature crawling low to the ground, but it’s definitely not about that.

Last week, DeepCrawl’s Clara Watt and I (Roman Adamita) hosted a webinar on how to get the most from DeepCrawl insights and features, some crucial SEO issues, and how to deal with them. Additionally, you will get a discount code that you can apply to monthly or annual plans.

First things first, let me start with the webinar’s replay and the deck.

Parts of the webinar:

- 0:00 – Let’s get to know each other

- 1:25 – Who is Clara Watt?

- 2:08 – Who is Roman Adamita?

- 3:17 – Webinar Contents

- 3:55 – Introduction to DeepCrawl

- 14:40 – DeepCrawl’s Competitor Landscape

- 16:40 – Common SEO Issues & How to deal with them (based on experience)

- 32:40 – Pagination Pages & How to get better performance from them (bonus)

- 39:38 – Quick Q&A

- 41:18 – DeepCrawl’s Insights and Features

- 49:18 – Recommended Filters (Some of the customized valuable filters that you might find useful)

- 51:35 – DeepCrawl Discount Code

- 52:14 – Thank you!

So, let’s continue with some of the fascinating insights and methods, which are probably the reason you are reading this recap. By the way, below you will find many more recommendations than I gave in the webinar. I hope you enjoy this blog post, and thanks for your interest in it.

[toc]

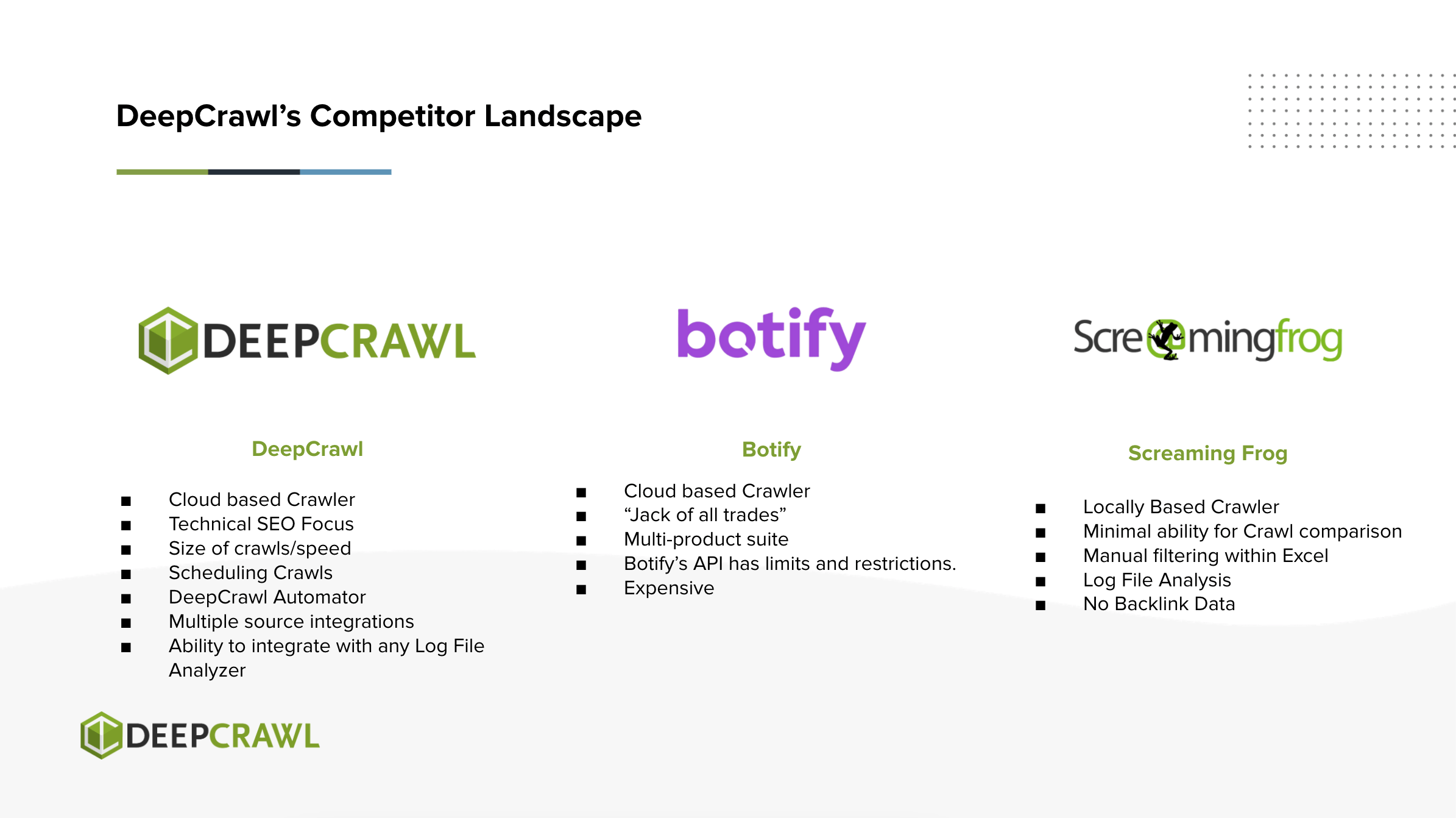

DeepCrawl’s Competitor Landscape

Clara Watt: The first one, Botify, is a cloud-based crawler, similar to DeepCrawl. We consider them a jack-of-all-trades, which means that they focus on producing a multi-product suite. By contrast, at DeepCrawl we have a technical SEO focus, and we want to invest in what we do best.

Clara Watt: Screaming Frog is at the other end of the spectrum: it is a locally-based crawler. The benefit of a cloud-based crawler is that nothing is actually saved on your computer. This naturally allows for bigger and faster crawls, which DeepCrawl prides itself on and which we’ve been working on quite a lot.

Most Common SEO Issues & How to Solve Them

Based on my SEO experience of more than seven years, I want to share some ideas regarding how to solve some of the worst technical and content SEO issues.

Thin Content & Empty Pages

An example of a test page that is thin and indexable.

Amazon Thin Page Example (with JavaScript)

Amazon Thin Page Example (without JavaScript)

Let’s make it clear: a short page is not always thin content. Sometimes a short answer is better than a long and boring one. But in my experience, almost every website has at least a few thin pages. The above screenshots were taken from two different websites: Kofana and Amazon.

In the above example, Kofana has a really thin page. Yes, it is one of our projects, and we are working on it. But everyone should know this: don’t leave your test pages live and indexable.

According to the top Google searches in 2020, Amazon has a global search volume of 350.7 million. Yes, this website is among the top 5 most searched in a month. But that doesn’t protect it from Google’s core updates. Domain authority is just one metric among hundreds. Thanks to Kevin Gibbons and their organic visibility report, I saw that Amazon was the biggest loser after Google’s December Core Update.

How to fix thin pages?

- Just delete them or set them all to “noindex”, provided they are really thin and unnecessary.

- Point the canonical tag to a higher-quality, more user-friendly page (or 301-redirect there). That way, thin pages will be deindexed, and their authority will move to the other page.

- Enrich your content where needed. It’s costly, but if you see future potential, don’t hesitate to create better content, because better content means better visibility, even in paid search results.

Also, in this blog post, you will find more specific details on how to solve thin content on e-commerce websites.
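If you want to shortlist thin-page candidates before deciding which of the fixes above applies, counting the visible words in the raw HTML is a reasonable first pass. The sketch below is only an illustration: the function names are hypothetical, and the 150-word threshold is an arbitrary assumption, not a Google rule. Because it reads the HTML without running scripts, it is closer to the “without JavaScript” view of a page.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.chunks.append(data)


def visible_word_count(html):
    """Return the number of whitespace-separated words outside scripts/styles."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())


def is_thin(html, min_words=150):
    # min_words is an illustrative assumption; tune it per page template.
    return visible_word_count(html) < min_words
```

A short page flagged by `is_thin` still needs a human look, since a short answer can be the right answer.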

Orphaned Search Console Pages

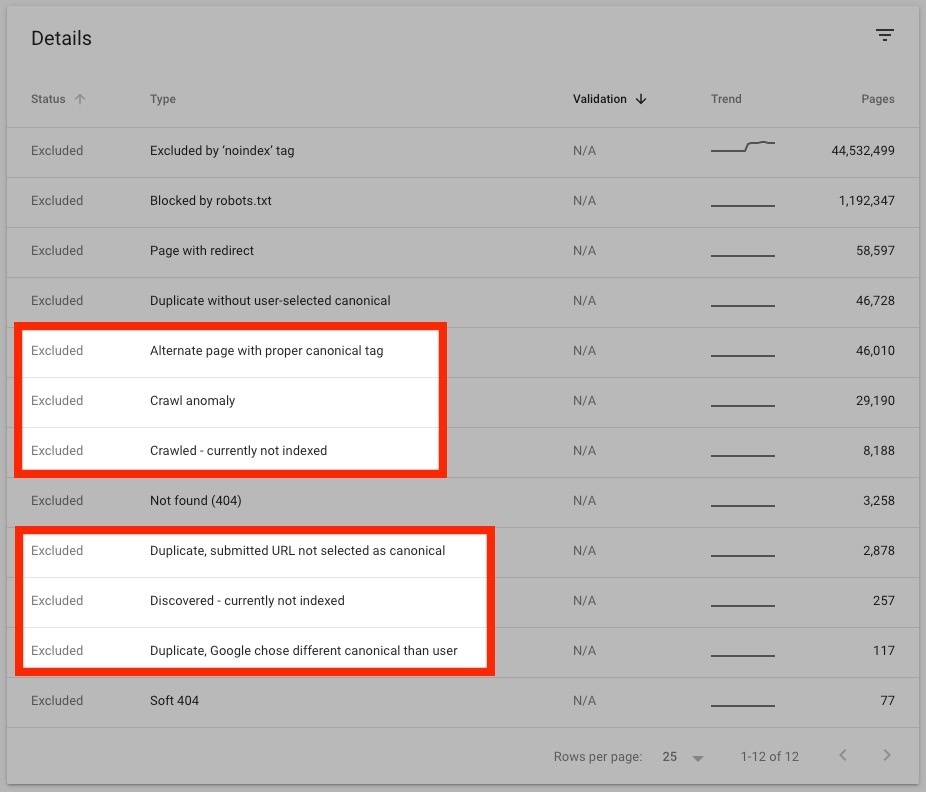

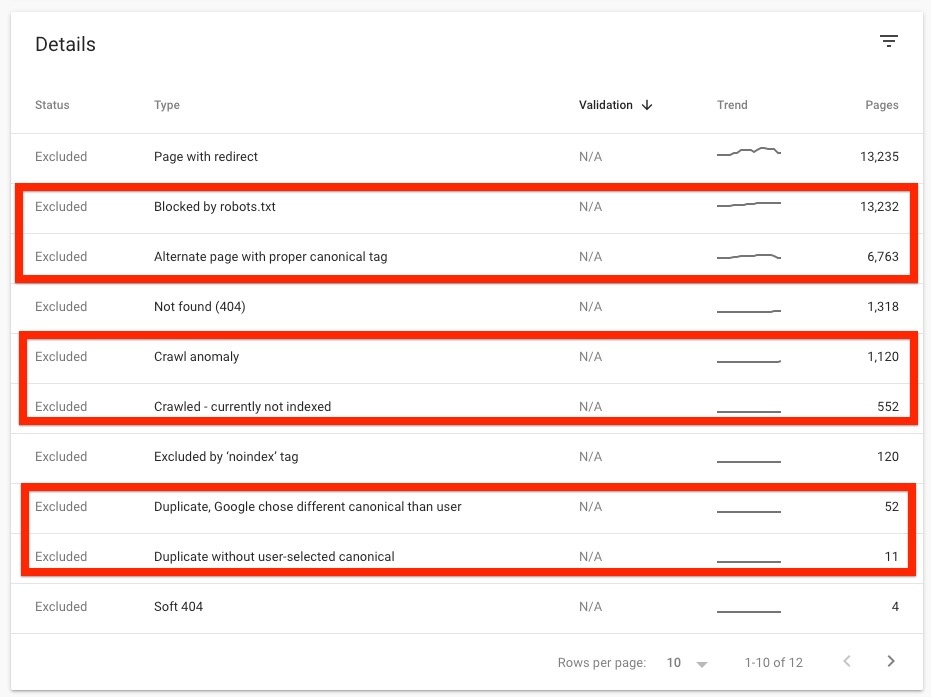

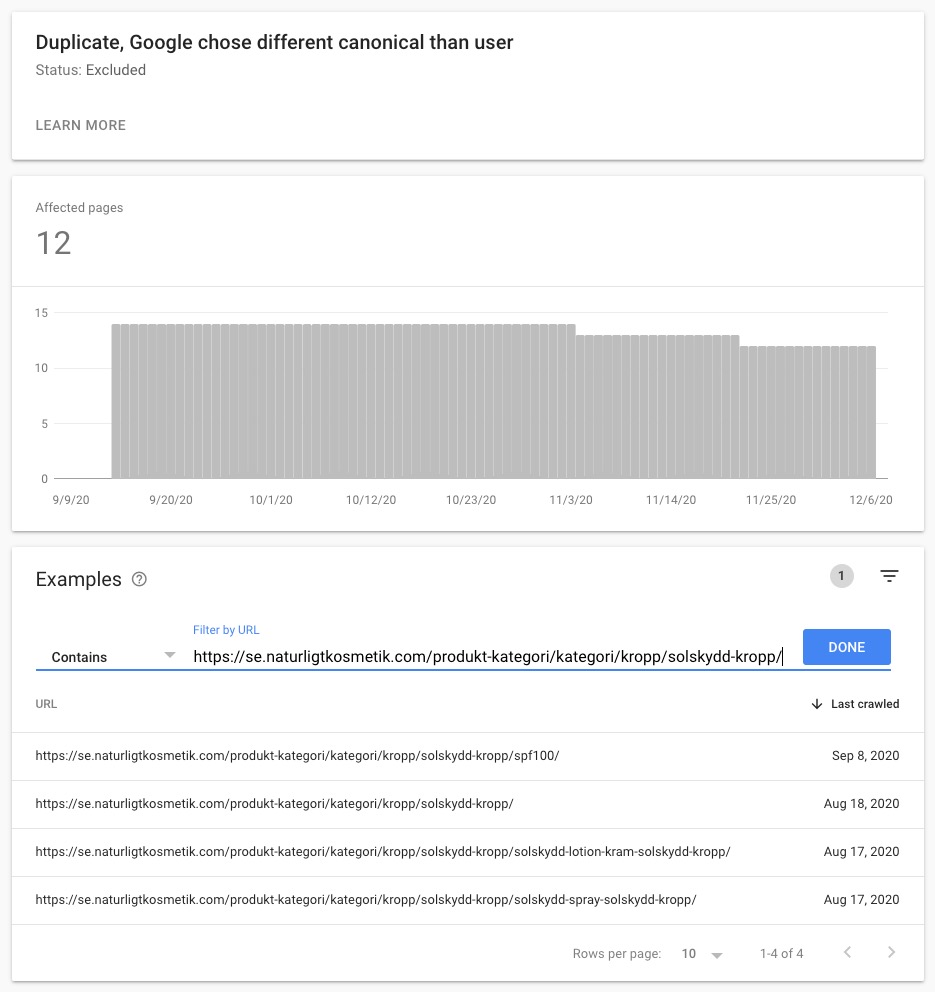

Google Search Console > Coverage > Excluded, Details

Uh oh. I’m not too fond of these kinds of issues. “Orphaned” means there must have been an indexing issue while Googlebot was crawling your pages. When you connect Google Search Console to DeepCrawl, this issue will appear if the page is listed in Search Console’s Coverage report.

- Crawl anomaly

- Crawled – currently not indexed

- Alternate page with proper canonical tag

- Duplicate, submitted URL not selected as canonical

- Duplicate, Google chose different canonical than user

If you see any of the above in your Coverage (Excluded) report, it may be because of this issue.

What do I recommend to solve orphaned Search Console pages?

- Check the page speed of those pages.

- Check the rules included in the robots.txt file.

- Check whether the canonical tag is the same as the URL.
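The robots.txt check in the list above can be scripted with Python’s standard library. This is a minimal sketch, assuming you have already downloaded the robots.txt content as text; `is_blocked_by_robots` is a hypothetical helper name. Keep in mind that Python’s parser follows the classic robots.txt rules and may not match Googlebot’s handling of wildcards, so treat the result as a hint rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def is_blocked_by_robots(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text disallows `url` for `user_agent`."""
    parser = RobotFileParser()
    # parse() accepts the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)
```

For example, with a file containing `Disallow: /checkout/` under `User-agent: *`, a URL such as `https://example.com/checkout/cart` would be reported as blocked.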

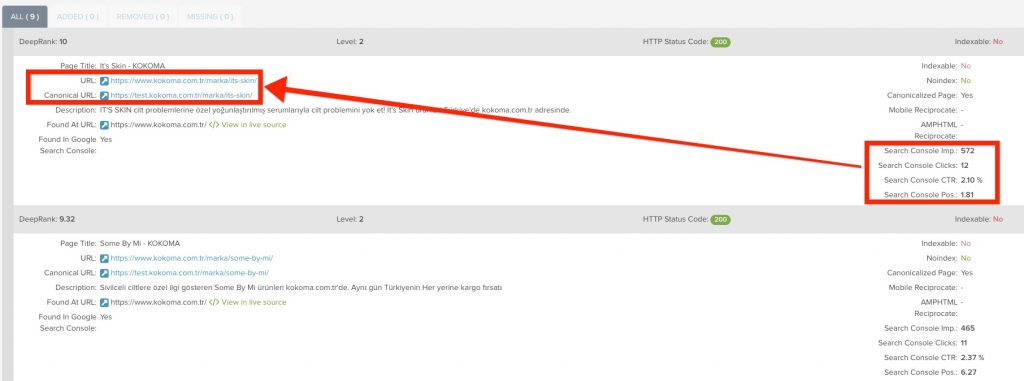

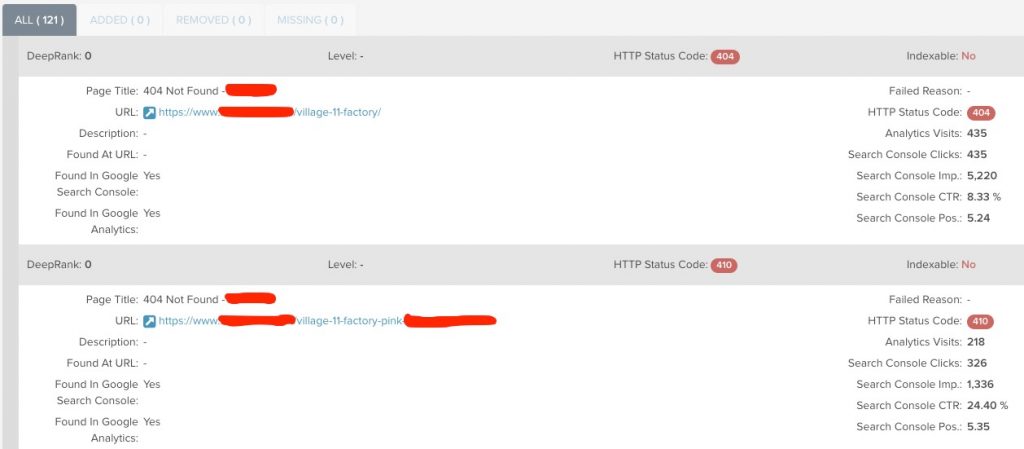

Non-Indexable Pages with Search Impressions

DeepCrawl Project Dashboard > Non-Indexable Pages with Search Impressions.

Google Search Console > Coverage > Excluded, List Details

Pages like this were usually indexed and got impressions from Google organic search results. These impressions were recorded in your Search Console property, and you see this issue in DeepCrawl because these pages were somehow removed from Google’s index. It happens; it’s really very common. But just because it’s widespread doesn’t mean you should leave it as it is.

Usually, these non-indexable pages with search impressions are hidden in the following Coverage reports.

- Crawl anomaly

- Blocked by robots.txt (maybe accidentally)

- Alternate page with proper canonical tag

- Crawled – currently not indexed

- Duplicate, submitted URL not selected as canonical

- Duplicate, Google chose different canonical than user

How to deal with deindexed pages with search impressions?

- Be sure the canonical tag is the same as the URL.

- Check your page with JavaScript disabled (you can try Chris Pederick’s Web Developer extension).

- Check your server connection time to those pages.
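The first check in this list, whether the canonical tag matches the page URL, is easy to automate. Here is a small sketch using only the standard library; `canonical_of` and `is_self_canonical` are hypothetical names, and ignoring a trailing slash when comparing is my assumption, which you may want to remove if your site treats both forms as different pages.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> in the document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if "canonical" in rel and attr.get("href"):
            self.hrefs.append(attr["href"])


def canonical_of(html, page_url):
    """Return the first canonical URL (made absolute), or None if there is none."""
    finder = _CanonicalFinder()
    finder.feed(html)
    return urljoin(page_url, finder.hrefs[0]) if finder.hrefs else None


def is_self_canonical(html, page_url):
    """True when the page declares itself as canonical (trailing slash ignored)."""
    canonical = canonical_of(html, page_url)
    return canonical is not None and canonical.rstrip("/") == page_url.rstrip("/")
```

Feed it the raw HTML of the page (fetched without JavaScript), so it also covers the second check to some extent: if the canonical only appears after scripts run, this sketch will not find it.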

Broken Pages with Traffic / Backlinks

DeepCrawl Project Dashboard > Broken Pages with Traffic.

My reaction every time I face this issue: why are you still a thing that exists on this planet?

This is a very old issue, but it still exists on most websites; even Google has it. In May 2021, Google will roll out an algorithm update based on page experience signals. This issue can hurt your page experience, so let’s get prepared.

How to resolve broken pages with traffic or backlinks?

- Be aware of every page that gets traffic. Analyze them by using some of the best tools like DeepCrawl, Screaming Frog SEO Spider, etc.

- Make sure every redirect ends at a 200 OK page. You may have old redirects that now lead to an error page.

- And don’t forget about the canonical tag and the XML sitemap. Generate your sitemap dynamically. Nothing but 200 OK pages should be included there.
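To make the redirect and sitemap checks above concrete, here is a rough sketch that works on crawl data you already have. It assumes a dictionary called `responses` that maps each URL to a `(status, redirect_target)` pair, for example exported from a crawler; that structure and both function names are hypothetical, chosen only for illustration.

```python
def final_status(url, responses, max_hops=10):
    """Follow redirects recorded in `responses`; return (final_url, status).

    The status is None when the chain loops, is too long, or hits an unknown URL.
    """
    seen = set()
    for _ in range(max_hops):
        if url in seen:
            return url, None  # redirect loop
        seen.add(url)
        status, location = responses.get(url, (None, None))
        if status in (301, 302, 307, 308) and location:
            url = location
            continue
        return url, status
    return url, None  # chain longer than max_hops


def sitemap_candidates(urls, responses):
    """Keep only URLs that answer 200 directly (no redirect, no error)."""
    return [u for u in urls if responses.get(u, (None, None))[0] == 200]
```

Any old URL whose `final_status` is not 200 is a redirect that now ends on an error page, and `sitemap_candidates` gives you the list a dynamically generated sitemap should contain.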

Pages without Canonical Tag

If I wrote a dialog about this, it would be like this one:

A website:

– Mirror mirror…

Google Mirror:

– What’s up?

A website:

– Tell me, which of these pages has the highest ranking potential on the entire website?

Google Mirror:

– I don’t even know who you are.

So yeah, pages without a canonical tag are like a person without a passport. The canonical tag sits in the head of your source code for a reason: to show that the page is unique and has its own origin.

The only thing I recommend you do in this case is to enter a valid URL into the canonical tag.
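What counts as a “valid URL” is open to interpretation, so take the following as one possible reading rather than a rule. The sketch treats a canonical value as problematic when it is not an absolute http(s) URL, has no host, or carries a #fragment. Those three criteria are my assumptions (Google’s guidance does recommend absolute URLs), and `canonical_problems` is a hypothetical helper name.

```python
from urllib.parse import urlsplit


def canonical_problems(href):
    """Return a list of human-readable problems found in a canonical href."""
    problems = []
    parts = urlsplit(href.strip())
    if parts.scheme not in ("http", "https"):
        problems.append("not an absolute http(s) URL")
    if not parts.netloc:
        problems.append("missing host")
    if parts.fragment:
        problems.append("contains a #fragment")
    return problems
```

An empty list means the value passed these basic checks; it does not prove the target page exists or returns 200.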

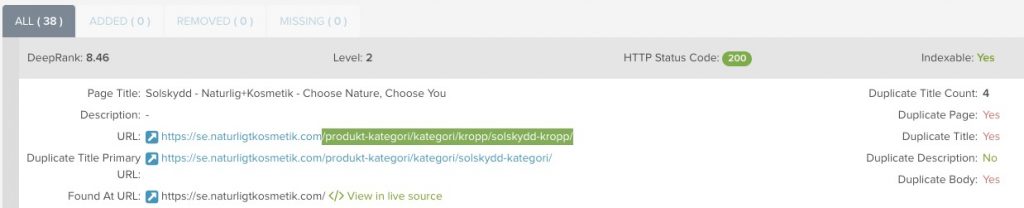

Pages with Duplicate Titles / Descriptions

DeepCrawl Project Dashboard > Duplicate Titles.

Google Search Console > Coverage > Excluded > Duplicate, Google chose different canonical than user

Did you notice something in this blog post? Take a look at the subheadings that start with “How to,” and come back here; I still need your attention.

I can count dozens of reasons why your website has duplicate meta titles or descriptions, but I’m not going to do that in this blog post. What I can tell you is that you must pay attention to your page titles and descriptions. They keep your website’s pages unique and indexable. Otherwise, you will have indexing issues with your duplicate pages because Google doesn’t give a (…big keyword here…) about them. Googlebot does not prefer duplicate pages, so it will end up removing those kinds of pages from organic search results. If you don’t like that, you have to do some on-page optimization.

How to make duplicate pages unique?

- Delete and redirect unnecessary pages.

- Customize your metadata (meta title and description). Research your pages’ unique keywords and use them in there.

- Invest more in content. Content has always been consumed, from the Stone Age to the Web. It always will be. There is no escape.
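Before customizing metadata, it helps to know exactly which pages share a title. The sketch below groups URLs by a normalized title; it assumes you have a `pages` dictionary of URL to title (for instance from a crawl export), and both that structure and the function name are hypothetical. Lowercasing and collapsing whitespace is my choice of normalization, so near-identical titles are grouped together.

```python
from collections import defaultdict


def duplicate_titles(pages):
    """Group URLs by normalized title and return only titles used more than once."""
    groups = defaultdict(list)
    for url, title in pages.items():
        key = " ".join(title.lower().split())  # ignore case and extra spaces
        groups[key].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}
```

The same function works for meta descriptions if you pass descriptions instead of titles.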

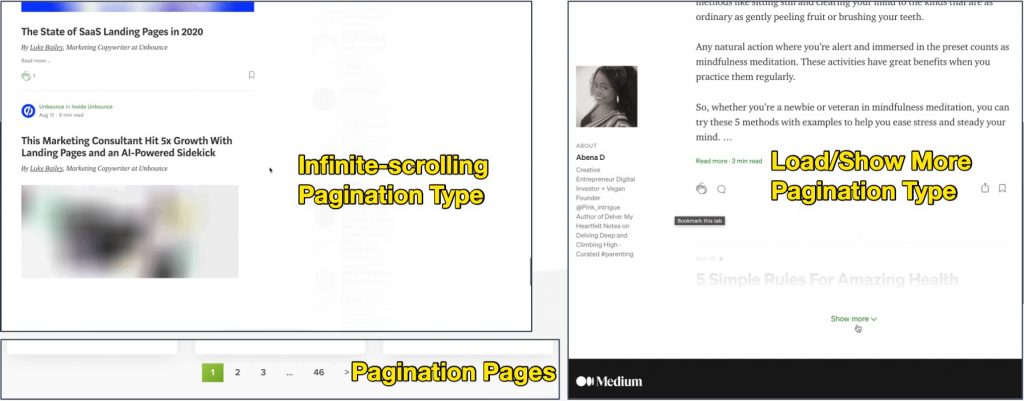

Pagination Pages

Before you blame me for what I’m about to tell you below, just read it and meditate on it. I assure you, there’s nothing bad about it. I know you are curious what this is about.

The problem behind pagination pages:

- They are indexable and duplicate. Yes, even when you include “ – Page 2” at the end of the pagination page’s meta title.

The solution for duplicate pagination pages (2 options):

- Point the canonical tag to the first page. All pages except the first will be deindexed, and their authority and entity will move to the category’s first page.

  - If the canonical of the 2nd page is SantaClaus.com/wooden-christmas-sleigh?page=2, change it to SantaClaus.com/wooden-christmas-sleigh. Do the same for the following pagination pages.

- Customize the metadata of every pagination page. It will cost you time, but you will get the most out of your pagination pages.

  - 1st page’s title and meta title: Coffee Cups – Brand

  - 2nd page’s title and meta title: Coffee Mugs – Brand

  - 3rd page’s title and meta title: Buy Coffee Mugs at The Best Price – Brand
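For the first option, the canonical of any pagination URL is simply the same URL without the page parameter. Here is a minimal sketch, assuming the parameter is called `page` as in the SantaClaus.com example; the function name is hypothetical, and sites that paginate with a path segment such as /page/2/ would need a different rule.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def first_page_canonical(url, page_param="page"):
    """Return `url` without its pagination parameter; other parameters are kept."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != page_param
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), ""))
```

Calling it on `https://santaclaus.com/wooden-christmas-sleigh?page=2` gives back the category’s first page URL, which is the value to put into the canonical tag of every paginated page.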

Here is why I gave you these two options:

Why the 1st option?

- As I mentioned before, duplicate titles are not a great idea.

- You will never see the second or third page of the category in the Top 10 search results. The most important page is your category’s first page. You created almost everything for this page. Google knows that, and its approach is to bring the best results to users.

- You will take a fantastic amount of pressure off your crawl budget.

- Remember a year ago, when Google chose to retire the importance of rel=“next” and rel=“prev” and announced it on Twitter? I’m pretty sure Googlebot understands whether there’s pagination on your pages without indexing them.

Why the 2nd option?

- Your website can get more visibility from your pagination pages. Just research the best-matching keywords for your categories.

- Your website will have only unique pages, which is a big plus for your index visibility compared to your competitors.

I hope one day these recommendations will bring you success!

So, in a nutshell:

Behind every issue, there must be a short- or long-term strategy for solving it. Don’t guess; test every time!